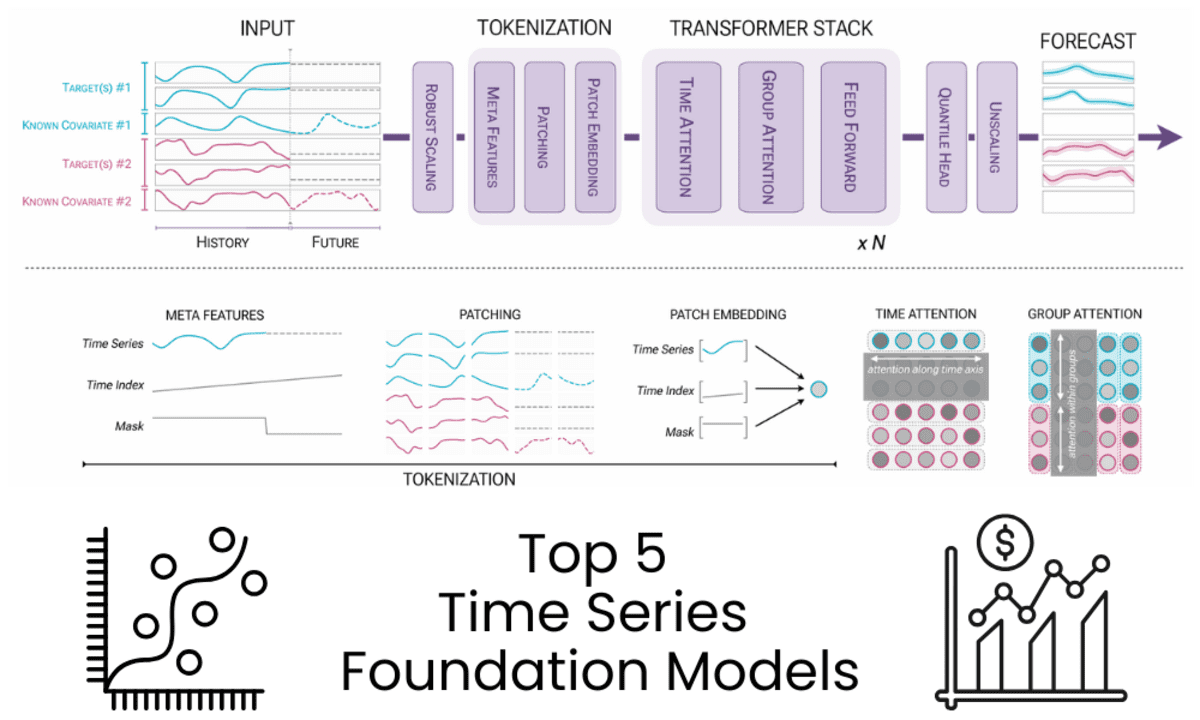

Image by Author | Diagram from Chronos-2: From Univariate to Universal Forecasting

Introduction

Foundation models did not begin with ChatGPT. Long before large language models became popular, pretrained models were already driving progress in computer vision and natural language processing, including image segmentation, classification, and text understanding.

The same approach is now reshaping time series forecasting. Instead of building and tuning a separate model for each dataset, time series foundation models are pretrained on large and diverse collections of temporal data. They can deliver strong zero-shot forecasting performance across domains, frequencies, and horizons, often matching deep learning models that require hours of training using only historical data as input.

If you are still relying primarily on classical statistical methods or single-dataset deep learning models, you may be missing a major shift in how forecasting systems are built.

In this tutorial, we review five time series foundation models, selected based on performance, popularity measured by Hugging Face downloads, and real-world usability.

1. Chronos-2

Chronos-2 is a 120M-parameter, encoder-only time series foundation model built for zero-shot forecasting. It supports univariate, multivariate, and covariate-informed forecasting in a single architecture and delivers accurate multi-step probabilistic forecasts without task-specific training.

Key features:

- Encoder-only architecture inspired by T5

- Zero-shot forecasting with quantile outputs

- Native support for past and known future covariates

- Long context length up to 8,192 and forecast horizon up to 1,024

- Efficient CPU and GPU inference with high throughput

Use cases:

- Large-scale forecasting across many related time series

- Covariate-driven forecasting such as demand, energy, and pricing

- Rapid prototyping and production deployment without model training

Best use cases:

- Production forecasting systems

- Research and benchmarking

- Complex multivariate forecasting with covariates

2. TiRex

TiRex is a 35M-parameter pretrained time series forecasting model based on xLSTM, designed for zero-shot forecasting across both long and short horizons. It can generate accurate forecasts without any training on task-specific data and provides both point and probabilistic predictions out of the box.

Key features:

- Pretrained xLSTM-based architecture

- Zero-shot forecasting without dataset-specific training

- Point forecasts and quantile-based uncertainty estimates

- Strong performance on both long and short horizon benchmarks

- Optional CUDA acceleration for high-performance GPU inference

Use cases:

- Zero-shot forecasting for new or unseen time series datasets

- Long- and short-term forecasting in finance, energy, and operations

- Fast benchmarking and deployment without model training

3. TimesFM

TimesFM is a pretrained time series foundation model developed by Google Research for zero-shot forecasting. The open checkpoint timesfm-2.0-500m is a decoder-only model designed for univariate forecasting, supporting long historical contexts and flexible forecast horizons without task-specific training.

Key features:

- Decoder-only foundation model with a 500M-parameter checkpoint

- Zero-shot univariate time series forecasting

- Context length up to 2,048 time points, with support beyond training limits

- Flexible forecast horizons with optional frequency indicators

- Optimized for fast point forecasting at scale

Use cases:

- Large-scale univariate forecasting across diverse datasets

- Long-horizon forecasting for operational and infrastructure data

- Rapid experimentation and benchmarking without model training

4. IBM Granite TTM R2

Granite-TimeSeries-TTM-R2 is a family of compact, pretrained time series foundation models developed by IBM Research under the TinyTimeMixers (TTM) framework. Designed for multivariate forecasting, these models achieve strong zero-shot and few-shot performance despite having model sizes as small as 1M parameters, making them suitable for both research and resource-constrained environments.

Key features:

- Tiny pretrained models starting from 1M parameters

- Strong zero-shot and few-shot multivariate forecasting performance

- Focused models tailored to specific context and forecast lengths

- Fast inference and fine-tuning on a single GPU or CPU

- Support for exogenous variables and static categorical features

Use cases:

- Multivariate forecasting in low-resource or edge environments

- Zero-shot baselines with optional lightweight fine-tuning

- Fast deployment for operational forecasting with limited data

5. Toto Open Base 1

Toto-Open-Base-1.0 is a decoder-only time series foundation model designed for multivariate forecasting in observability and monitoring settings. It is optimized for high-dimensional, sparse, and non-stationary data and delivers strong zero-shot performance on large-scale benchmarks such as GIFT-Eval and BOOM.

Key features:

- Decoder-only transformer for flexible context and prediction lengths

- Zero-shot forecasting without fine-tuning

- Efficient handling of high-dimensional multivariate data

- Probabilistic forecasts using a Student-T mixture model

- Pretrained on over two trillion time series data points

Use cases:

- Observability and monitoring metrics forecasting

- High-dimensional system and infrastructure telemetry

- Zero-shot forecasting for large-scale, non-stationary time series

Summary

The table below compares the core characteristics of the time series foundation models discussed, focusing on model size, architecture, and forecasting capabilities.

| Model |

Parameters |

Architecture |

Forecasting Type |

Key Strengths |

| Chronos-2 |

120M |

Encoder-only |

Univariate, multivariate, probabilistic |

Strong zero-shot accuracy, long context and horizon, high inference throughput |

| TiRex |

35M |

xLSTM-based |

Univariate, probabilistic |

Lightweight model with strong short- and long-horizon performance |

| TimesFM |

500M |

Decoder-only |

Univariate, point forecasts |

Handles long contexts and flexible horizons at scale |

| Granite TimeSeries TTM-R2 |

1M–small |

Focused pretrained models |

Multivariate, point forecasts |

Extremely compact, fast inference, strong zero- and few-shot results |

| Toto Open Base 1 |

151M |

Decoder-only |

Multivariate, probabilistic |

Optimized for high-dimensional, non-stationary observability data |

Abid Ali Awan (@1abidaliawan) is a certified data scientist professional who loves building machine learning models. Currently, he is focusing on content creation and writing technical blogs on machine learning and data science technologies. Abid holds a Master's degree in technology management and a bachelor's degree in telecommunication engineering. His vision is to build an AI product using a graph neural network for students struggling with mental illness.